The distributed CMS: How the decoupled CMS endgame will impact your organization

June 6, 2019

This article is also available in Portuguese.

The decoupled content management system (CMS) is both reinventing the way we think about content management and evolving how we implement and deploy the software architectures surrounding it. Today, what I call the third wave of the content management system, namely the proliferation of headless or decoupled CMSs that focus on a separation of concerns between structured content and its presentation, is in full swing. But the next wave, the distributed CMS, is just around the corner.

Organizations everywhere are now contending with two seemingly divergent trends: a channel explosion in which users expect content at their beck and call on every device and every form factor, and an architectural decoupling in which CMSs are no longer contiguously interconnected software systems but rather networks of interchangeable services with limited purposes. In short, they are seeking distributed content management, not simply decoupled content management.

In April, I had the privilege of speaking at the Drupal Executive Summit at DrupalCon Seattle, an event where some of the largest organizations were represented. I was invited to give a brief presentation about how decoupled Drupal—and the decoupled CMS trend at large—will impact organizations with large web presences, irrespective of where they are on their digital transformation trajectories. As there was no recording, I’ll present my insights here.

Content everywhere: The channel explosion

In my book Decoupled Drupal in Practice (Apress, 2018), I describe the ongoing channel explosion as something akin to the Cambrian explosion, a point in the fossil record at which diversification of life forms occurred at an unprecedented rate. Consider the starting point: when the first iteration of Drupal, an open-source CMS powering about 2% of the web, was released in the form of Drop.org in 2000, the web primarily consisted of text and images, with interactions governed by keyboard and mouse.

In 2012, a second significant diversification occurred in the form of responsive web design. The notion that “content is like water” spread to even the most ossified recesses of monolithic CMSs, which are contiguous, end-to-end, and inseparable systems that include both structured content and its presentation. In the Drupal landscape, the Spark initiative worked diligently not only to ensure that all administrative interfaces were responsive but also to build interfaces allowing content editors and marketers to control how responsive design worked on their websites.

A corresponding and related trend of mobile application development using native technologies led to a wave of software development kits (SDKs) being created for the purposes of building Android or iOS applications. Among the earliest examples of this was the Drupal iOS SDK created by Kyle Browning, which later became known as Waterwheel.swift. Now not only could editors control visual representation across devices, but developers could also build front ends for CMSs in the native code of those devices, unleashing greater growth at the fringes of the CMS ecosystem.

Over the last five years, mobile application frameworks have given way to a slew of technologies allowing for the integration of CMSs with form factors like the Apple Watch, digital signage, Roku and set-top boxes, and augmented reality overlays. In 2017, for instance, I had the honor of leading the Acquia Labs team in architecting and implementing Ask GeorgiaGov, the first-ever Alexa skill for citizens of the state of Georgia—and all built as an additional layer atop a Drupal 7 site.

The channel explosion has, however, engendered challenges on the marketing side of the equation and led to an incongruity in CMS value propositions. Editors who formerly had access to contextual interfaces for manipulating all manner of visual features, like layout and drag-and-drop controls, no longer have such capabilities available to them on the various front ends that are ubiquitous today (and Mark Boulton has argued that this is a good thing). This means more developer resources, more infrastructure, and more complexity, all growing in step with evolving user expectations.

A brief history of modern web development

Before the CMS, websites consisted primarily of static pages in the form of flat files uploaded to an FTP server. In the late 1990s, the term dynamic primarily referred to server-side operations that required full page refreshes in order for users to see a different application state. For example, form submissions were often handled by Perl scripts on the server. The first wave of the CMS consisted of server-side static site generators like Interwoven TeamSite, Vignette, and others.

The second wave of the CMS, represented by storied heavyweights like Drupal and WordPress in open source and CQ5 and Sitecore in proprietary software, was born during this era of dynamic server-side applications, and its CMSs were, in fact, more functionally powerful versions of those applications. In early versions of Drupal, for example, a typical site could provide a newsletter subscription form that required two server round trips: one to render the initial state of the form, and a second to display a success message after a full page refresh.

With the rise of Ajax and DHTML in the mid-2000s, popularized by revolutionary web applications like Gmail, Google Maps, and Basecamp and later made widely accessible by jQuery, dynamic operations were no longer limited to the server: in-page interactions could occur without a full page refresh. A jQuery widget on a newsletter subscription form could issue an Ajax request to the server and render a spinner and then a success message, all without reloading the page.
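
The in-page pattern can be sketched in TypeScript, with the standard `fetch` API standing in for jQuery’s Ajax helpers. This is a minimal sketch under stated assumptions: the `/newsletter/subscribe` endpoint, the JSON payload, and the `SubmitState` names are hypothetical, and `fetch` is passed in as a parameter so the logic can run outside a browser. The point is that the user-visible state moves from pending to a result without the page ever reloading.

```typescript
// Hypothetical UI states for an in-page newsletter subscription widget.
type SubmitState = "idle" | "pending" | "success" | "error";

// The slice of fetch this sketch relies on. In a browser, window.fetch satisfies it;
// accepting it as a parameter lets the logic run without a browser.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean }>;

// Submits the form in the background and reports each UI state to `render`,
// which would show a spinner or a message. The page itself is never reloaded.
async function subscribe(
  email: string,
  fetchFn: FetchLike,
  render: (state: SubmitState) => void,
): Promise<SubmitState> {
  render("pending");
  try {
    // "/newsletter/subscribe" is a hypothetical endpoint.
    const res = await fetchFn("/newsletter/subscribe", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ email }),
    });
    const result: SubmitState = res.ok ? "success" : "error";
    render(result);
    return result;
  } catch {
    render("error"); // network failure: show an error without leaving the page
    return "error";
  }
}
```

In a browser, `fetchFn` would simply be `window.fetch`, and `render` would toggle a spinner or message element.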

The first JavaScript frameworks of the JavaScript renaissance adhered to this same model but made rendering and DOM manipulation much more powerful. Angular’s directives reinvented the way developers expressed logic in markup, and React’s Virtual DOM upended the prevailing approach to client-side rendering through intelligent diffing. In a CMS context, a JavaScript framework could be embedded within a CMS front end, taking over from the initial markup rendered server-side by the CMS and fetching further data via HTTP requests.
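
The idea behind diffing can be illustrated with a deliberately naive sketch: compare two lightweight virtual trees and emit only the patches needed to turn one into the other, rather than rebuilding the whole DOM. This positional diff, and its `VNode` and `Patch` types, are illustrative assumptions; React’s actual reconciliation algorithm is more sophisticated, using element types and keys to decide what to reuse.

```typescript
// A virtual node is either a text node or an element with children.
type VNode = string | { tag: string; children: VNode[] };

// A patch describes one change to apply to the real DOM at a child-index path.
type Patch =
  | { op: "insert"; path: number[]; node: VNode }
  | { op: "remove"; path: number[] }
  | { op: "replace"; path: number[]; node: VNode };

// Naive positional diff: compares children index by index and emits only the
// changes, so unchanged subtrees produce no DOM work at all.
function diff(oldNode: VNode | undefined, newNode: VNode | undefined, path: number[] = []): Patch[] {
  if (oldNode === undefined) {
    return newNode === undefined ? [] : [{ op: "insert", path, node: newNode }];
  }
  if (newNode === undefined) return [{ op: "remove", path }];
  if (typeof oldNode === "string" || typeof newNode === "string") {
    return oldNode === newNode ? [] : [{ op: "replace", path, node: newNode }];
  }
  if (oldNode.tag !== newNode.tag) return [{ op: "replace", path, node: newNode }];
  const patches: Patch[] = [];
  const length = Math.max(oldNode.children.length, newNode.children.length);
  for (let i = 0; i < length; i++) {
    patches.push(...diff(oldNode.children[i], newNode.children[i], [...path, i]));
  }
  return patches;
}
```

For a list whose first item’s text changes and which gains a second item, `diff` returns just two patches: a text replacement at path `[0, 0]` and an insertion at path `[1]`.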

The transition was made complete with Node.js and the availability of universal JavaScript, which paved the way for code sharing across client and server. No longer were the server and client two irreconcilable sides of the equation; now a single framework could be used not only to generate an initial render synchronously flushed to the browser but also to rehydrate rendered content asynchronously through client-side requests to a web service.
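
A minimal sketch of the code-sharing half of this idea, assuming a hypothetical `Article` shape: one `renderArticle` function produces the initial markup on the server and is reused unchanged in the browser, where the embedded state is restored and the client render is checked against the server markup. Embedding state in a JSON `<script>` element is one common approach, not the only one; later client-side requests to the web service would feed new `Article` objects through the same function.

```typescript
// Hypothetical content shape returned by a CMS web service.
interface Article {
  title: string;
  body: string;
}

function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Universal code: the same render function runs on the server (Node.js) and in the browser.
function renderArticle(article: Article): string {
  return `<article><h1>${escapeHtml(article.title)}</h1><p>${escapeHtml(article.body)}</p></article>`;
}

// Server: flush the initial markup together with the state that produced it.
function renderPage(article: Article): string {
  // Escape "<" so the serialized state cannot close the <script> element early.
  const state = JSON.stringify(article).replace(/</g, "\\u003c");
  return (
    `<div id="app">${renderArticle(article)}</div>` +
    `<script id="initial-state" type="application/json">${state}</script>`
  );
}

// Client: restore the state and check that the shared renderer reproduces the
// server markup, which is what lets a framework attach to it instead of redrawing.
function rehydrate(serializedState: string, serverMarkup: string): { article: Article; matches: boolean } {
  const article = JSON.parse(serializedState) as Article;
  return { article, matches: renderArticle(article) === serverMarkup };
}
```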

Because second-wave, monolithic CMSs were so ill-equipped to handle client-side operations and the sort of dynamic interactions that users came to expect in the early 2010s, the headless or decoupled CMS rapidly became an essential paradigm for developers who prioritized performance, even at the expense of ossified editorial capabilities that functioned only in a monolithic, end-to-end context. For more background on this, consult my book Decoupled Drupal in Practice.

Headless and decoupled: The third wave of the CMS

Whereas the JavaScript renaissance ushered in a period of substantial technical innovation in the front end and relevant changes in modern tooling and web development, it was predominantly a developer-led shift that only percolated later to engineering management and workflow considerations.

The decoupled CMS is difficult to pigeonhole into a single definition, and differences in terminology across CMS communities make it even harder for stakeholders to agree on one. But simply put, a decoupled CMS is the use of a CMS as a content service for distinct consumer applications, whether web, native, or something else. This holds true regardless of whether the CMS was purpose-built for such an approach (as are API-first CMSs such as Contentful and Prismic) or not (as with API-enabled CMSs like Drupal and WordPress).
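
To make “CMS as a content service” concrete, the sketch below normalizes a response once and hands the same presentation-neutral data to two unrelated consumers. It assumes a minimal subset of the JSON:API format (a `data` array of resource objects with `type`, `id`, and `attributes`), which Drupal exposes at routes such as `/jsonapi/node/article`; the `title` field, the `ContentItem` type, and both consumer functions are hypothetical.

```typescript
// Minimal subset of a JSON:API document (jsonapi.org): a "data" array of
// resource objects, each with a type, an id, and an attributes map.
interface JsonApiResource {
  type: string;
  id: string;
  attributes: Record<string, unknown>;
}
interface JsonApiDocument {
  data: JsonApiResource[];
}

// Presentation-neutral model shared by every consumer application (hypothetical).
interface ContentItem {
  id: string;
  title: string;
}

// Normalize the CMS response once; consumers never see CMS-specific structure.
function toContentItems(doc: JsonApiDocument): ContentItem[] {
  const items: ContentItem[] = [];
  for (const resource of doc.data) {
    const title = resource.attributes["title"];
    if (typeof title === "string") items.push({ id: resource.id, title });
  }
  return items;
}

// Two very different consumers of the same content service (both hypothetical).
function toVoiceResponse(items: ContentItem[]): string {
  return `Here are the latest articles: ${items.map((item) => item.title).join(", ")}.`;
}

function toSignageLines(items: ContentItem[], maxLines: number): string[] {
  return items.slice(0, maxLines).map((item) => item.title.toUpperCase());
}
```

The CMS neither knows nor cares whether its content ends up spoken by a voice assistant or shown on digital signage; each consumer only depends on `ContentItem`.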

Much as the early 20th century witnessed an acceleration of manufacturing processes on factory floors, the decoupled CMS is being driven in part by a desire for better interchangeable parts in CMS workflows. Because of how and in what context monolithic CMSs were built, it’s nearly impossible to decouple an end-to-end CMS into distinct services that handle single concerns, as the microservices era encourages. If only the CMS had waited just a bit longer to evolve in our industry!

There are three reasons why the decoupled CMS has gained such steam, all of which are covered in the initial chapters of my book Decoupled Drupal in Practice: pipelined development, interchangeable presentation layers (better updates), and API convergence. I cover each of these in turn in the following sections.

Pipelined development

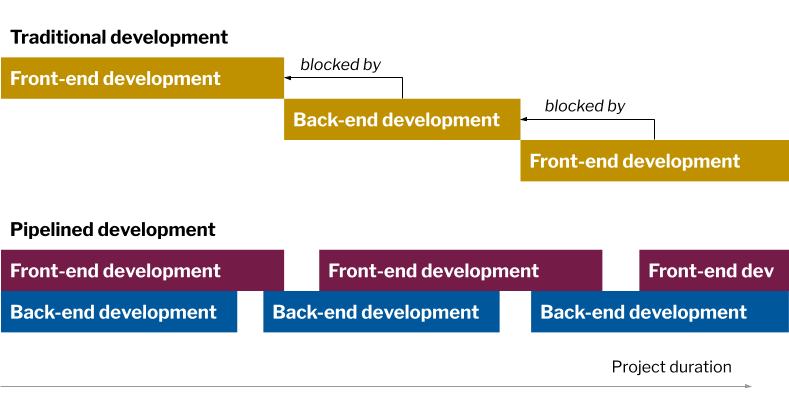

In traditional CMS implementation workflows, one of the most common complaints concerns blocking dependencies that are inextricable from one another. In Drupal development, for example, a front-end developer building a Drupal theme may need to wait for a back-end Drupal developer to provide data in the form of a variable accessible in a Twig template. This leads to workflows in which developers are forced to wait for each other’s work to be complete before pressing forward.

In decoupled CMS workflows, developers can work in parallel and on distinct components of the decoupled CMS architecture without interfering with or hindering each other’s work. A front-end developer can easily work on a new feature in a JavaScript application at the same time that a back-end developer improves the way data is exposed through the underlying content API. In this way, front-end and back-end development can be pipelined, though this of course requires a steady hand when it comes to project management.

Interchangeable presentation layers

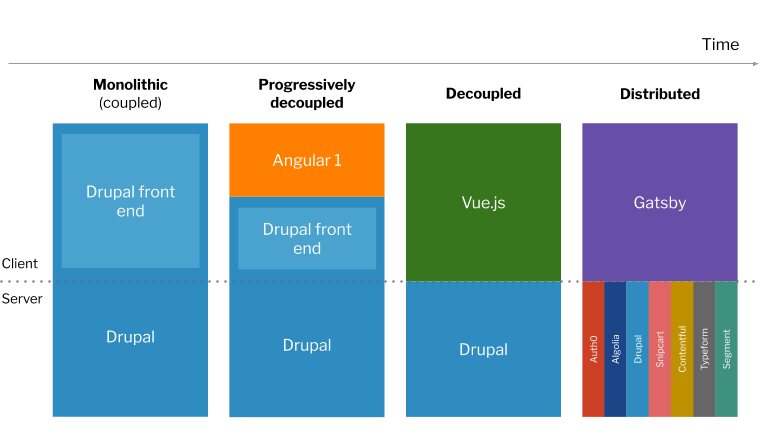

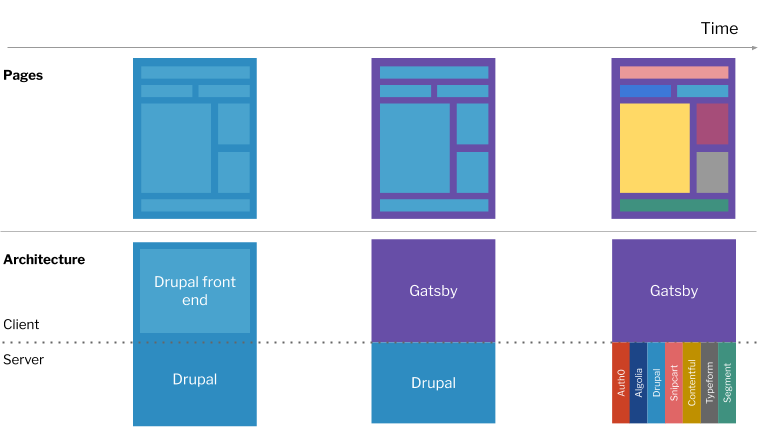

One of the most compelling reasons that enterprise architects have for selecting a decoupled CMS architecture is the interchangeability of presentation layers, which improves updates and maintenance down the road. For example, a Drupal CMS front end may evolve from being built in Twig and PHP using Drupal’s monolithic approach towards progressive decoupling, in which a JavaScript front end such as Angular is embedded within the existing Drupal front end.

This approach can then be replaced by a fully decoupled Drupal approach in which Angular is rendered not only client-side but also server-side through Node.js, with an initial rendered application state. Several years later, the sands have shifted, and the front-end development team may opt to replace the Angular application with Vue.js, and then with Gatsby. All the while, the CMS remains the unchanging foundation undergirding this architecture, meaning that front-end innovation can proceed while the back end stays stable for business reasons.
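
The same idea in code, as a hypothetical sketch: the back end is reduced to a `ContentApi` contract, and any `PresentationLayer` that satisfies the rendering contract can replace another without changes to the back end. The names and markup are illustrative stand-ins, not Drupal, Angular, Vue.js, or Gatsby APIs.

```typescript
interface Page {
  path: string;
  title: string;
}

// The stable back end: the CMS exposes content through one unchanging contract.
interface ContentApi {
  getPage(path: string): Page | undefined;
}

// Any front end that satisfies this contract can be swapped in.
interface PresentationLayer {
  render(page: Page): string;
}

// Stand-in for the CMS; a real one would answer over HTTP.
const cms: ContentApi = {
  getPage: (path) => (path === "/about" ? { path, title: "About us" } : undefined),
};

// Hypothetical front ends standing in for, say, an Angular app today and a
// Vue.js or Gatsby app later. Only their markup differs.
const currentFrontEnd: PresentationLayer = {
  render: (page) => `<h1>${page.title}</h1>`,
};
const nextFrontEnd: PresentationLayer = {
  render: (page) => `<header><h1>${page.title}</h1></header>`,
};

// Swapping the front end never touches the back end.
function serve(path: string, api: ContentApi, frontEnd: PresentationLayer): string {
  const page = api.getPage(path);
  return page ? frontEnd.render(page) : "Not found";
}
```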

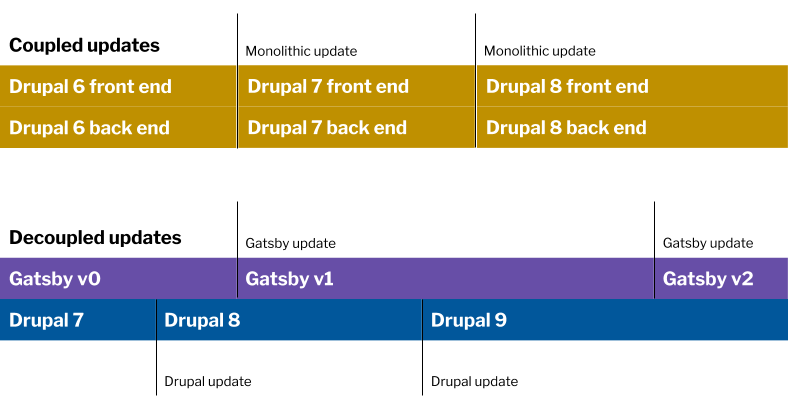

Interchangeability in presentation layers also means that when major version updates or security vulnerabilities occur, updates can happen separately, on distinct portions of the architecture. Whereas a monolithic WordPress update would require both front-end and back-end developers to stop work, a headless WordPress implementation with Gatsby on the front end could update WordPress and Gatsby at different frequencies, according to not only the release cadences of the respective technologies but also the whims of developer teams. This means that these CMS architectures are incrementally evolvable: different portions advance at different rates.

API convergence

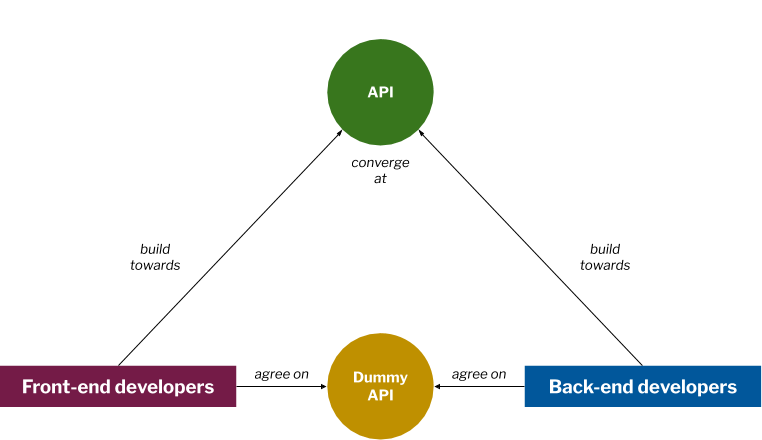

While past CMS workflows may have involved a front-end developer dummying certain templated data in a CMS theme until the back-end developer had exposed a property to a template, decoupled CMS workflows revolve around what I call API convergence, a concept related to pipelined development. Due to the wide gulf between the front-end and back-end technologies leveraged in a decoupled CMS implementation, developers should first agree on where they will end up. Rather than blocking one another by working on the entire system together, front-end and back-end developer teams collaborate solely on the API before going on their merry ways.

To illustrate this concept, consider a decoupled CMS project entering discovery. At the discovery stage, front-end and back-end developers agree on a dummy API that will form the nexus of all work until the finalized API is made available. In short, this dummy API contains the data model that front-end developers will test their code against and that back-end developers will build their APIs toward. By the end of the project, back-end developers have provisioned the necessary APIs according to the agreed API specification, and front-end developers have built according to that same specification. At the flip of a switch from the dummy API to the real one, the entire architecture should work without any additional effort.
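
A minimal TypeScript sketch of API convergence, assuming the agreed contract is a hypothetical `ArticleApi` with one `listArticles` method: front-end code is written only against the interface, a `MockArticleApi` serves as the dummy API during development, and a single configuration flag switches to the real implementation. The `/api/articles` route is hypothetical, and the HTTP client is injected as `fetchJson` so the sketch does not depend on a particular runtime.

```typescript
// The contract both teams agree on during discovery (fields are hypothetical).
interface Article {
  id: string;
  title: string;
  publishedAt: string; // ISO 8601 date, e.g. "2019-06-06"
}

interface ArticleApi {
  listArticles(): Promise<Article[]>;
}

// Dummy API: front-end developers build and test against this fixture.
class MockArticleApi implements ArticleApi {
  async listArticles(): Promise<Article[]> {
    return [
      { id: "1", title: "Placeholder A", publishedAt: "2019-06-01" },
      { id: "2", title: "Placeholder B", publishedAt: "2019-06-05" },
    ];
  }
}

// Injected HTTP helper, e.g. a thin wrapper around fetch that returns parsed JSON.
type FetchJson = (url: string) => Promise<unknown>;

// Real API: back-end developers build toward the same contract.
class HttpArticleApi implements ArticleApi {
  private readonly baseUrl: string;
  private readonly fetchJson: FetchJson;

  constructor(baseUrl: string, fetchJson: FetchJson) {
    this.baseUrl = baseUrl;
    this.fetchJson = fetchJson;
  }

  async listArticles(): Promise<Article[]> {
    // Hypothetical route; the response is assumed to match the agreed contract.
    return (await this.fetchJson(`${this.baseUrl}/api/articles`)) as Article[];
  }
}

// Front-end code depends only on the contract, never on the implementation.
async function latestTitles(api: ArticleApi, limit: number): Promise<string[]> {
  const articles = await api.listArticles();
  return [...articles]
    .sort((a, b) => b.publishedAt.localeCompare(a.publishedAt))
    .slice(0, limit)
    .map((article) => article.title);
}

// The "flip of a switch": one configuration value picks the implementation.
function createArticleApi(useMock: boolean, baseUrl: string, fetchJson: FetchJson): ArticleApi {
  return useMock ? new MockArticleApi() : new HttpArticleApi(baseUrl, fetchJson);
}
```

Because `latestTitles` never learns which implementation it received, switching from the dummy API to the real one changes no front-end code, provided the back end honors the contract.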

The distributed CMS and the content mesh: The fourth wave of the CMS

But the third wave of the CMS, where the structure of content and its presentation are separated into two distinct technologies, is not where this story ends. There is a coming fourth wave of the CMS in the form of a distributed CMS that is truly decoupled in every sense of the word.

Gatsby co-founder Sam Bhagwat recently authored a series of blog posts about the content mesh, an idea that marries microservices concepts with an agnostic front-end layer solely concerned with presentation. The content mesh has antecedents in the microservices and orchestration landscapes, where a service mesh consists of a variety of intermediary services between systems.

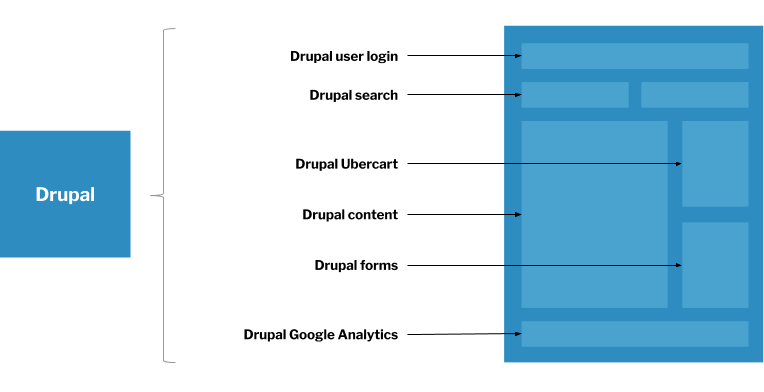

In a content mesh, the page itself becomes decoupled as well, and the CMS becomes merely a collection of modular and additive capabilities in the form of distinct services, whether custom-built or provided as part of a SaaS solution. Take, for instance, a typical e-commerce site leveraging Drupal. Traditional CMSs like Drupal formerly handled all concerns at the expense of a simplified developer experience, with user login and registration, site search, cart functionality, content management, form handling, and other services like Salesforce and Google Analytics wrapped up in Drupal modules or core features.

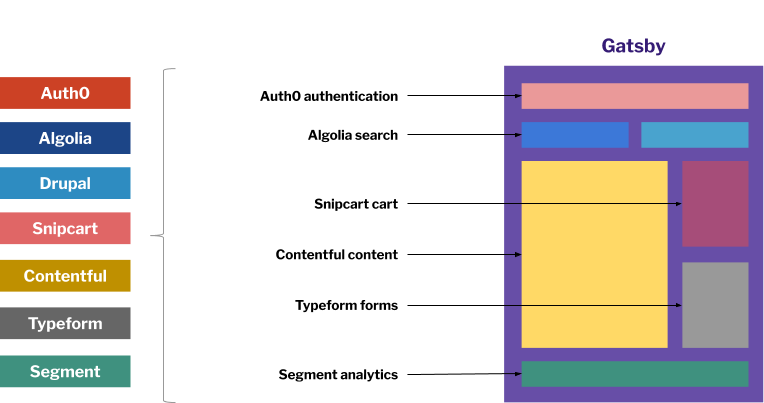

But the content mesh as described by Bhagwat unleashes much more freedom when it comes to interchangeable services on the front end. In this way, whereas the third wave of the CMS—the headless CMS—is about interchangeable presentation layers, the fourth wave of the CMS—the distributed CMS—is about multiple interchangeable services within a single presentation layer.

Consider, for example, that user authentication can be provided easily and cheaply with Auth0, search with Algolia, cart functionality with Snipcart, content management with Contentful, form handling with Typeform, and analytics with Segment. The list goes on. In short, not only is each of these services interchangeable, but they can also be combined with one another to appear as a seamless, fully integrated whole.
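
A hypothetical sketch of how a presentation layer might stitch such services into one page. The `AuthService`, `ContentService`, `SearchService`, and `CartService` interfaces are assumptions standing in for providers like Auth0, Contentful, Algolia, and Snipcart; they do not reflect those vendors’ actual SDKs. Independent calls run in parallel, and only the cart waits on the result of authentication.

```typescript
// Each capability is a separate, independently replaceable service. These
// interfaces are hypothetical; real providers expose their own SDKs and APIs.
interface AuthService { currentUser(): Promise<string | null> }
interface ContentService { getPage(slug: string): Promise<{ title: string; body: string }> }
interface SearchService { related(slug: string): Promise<string[]> }
interface CartService { itemCount(user: string | null): Promise<number> }

interface Services {
  auth: AuthService;
  content: ContentService;
  search: SearchService;
  cart: CartService;
}

// What the presentation layer renders: one seamless page built from many services.
interface PageModel {
  title: string;
  body: string;
  user: string | null;
  related: string[];
  cartCount: number;
}

async function assemblePage(slug: string, services: Services): Promise<PageModel> {
  // Content, identity, and search do not depend on one another, so fetch them in parallel.
  const [page, user, related] = await Promise.all([
    services.content.getPage(slug),
    services.auth.currentUser(),
    services.search.related(slug),
  ]);
  // The cart depends on who the user is, so it waits for auth.
  const cartCount = await services.cart.itemCount(user);
  return { title: page.title, body: page.body, user, related, cartCount };
}
```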

In a typical evolution for a company working with modern CMS approaches, an organization will first construct a site using a monolithic CMS such as Drupal, with its integrated front end, before moving into the third wave, or headless stage, with a decoupled presentation layer provided by JavaScript technologies like React or Vue.js. But the fourth wave, the distributed CMS, will be one in which not only presentation layers are interchangeable with one another (Ember for Angular, Vue.js for React), but also services within those presentation layers (Snipcart for Drupal’s Ubercart module, Typeform for Drupal’s own Form API).
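
Swapping a service within the same presentation layer can be sketched as a configuration change. In this hypothetical example, a cart widget is written once against a `CartProvider` interface, and a registry maps provider names to adapters; the in-memory adapters are stand-ins for, say, a Drupal Ubercart integration or Snipcart, and none of those products’ APIs are used.

```typescript
// The contract the presentation layer's cart widget is written against.
interface CartProvider {
  backend: string;
  add(sku: string, quantity: number): void;
  count(): number;
}

// In-memory stand-in. A real adapter would wrap a CMS module such as Ubercart or
// a SaaS cart such as Snipcart; none of their actual APIs are used here.
function createInMemoryCart(backend: string): CartProvider {
  const lines = new Map<string, number>();
  return {
    backend,
    add(sku, quantity) {
      lines.set(sku, (lines.get(sku) ?? 0) + quantity);
    },
    count() {
      let total = 0;
      lines.forEach((quantity) => {
        total += quantity;
      });
      return total;
    },
  };
}

// Hypothetical provider names; swapping services is a configuration change.
type CartProviderName = "cms-module" | "saas-cart";

const cartProviders: Record<CartProviderName, () => CartProvider> = {
  "cms-module": () => createInMemoryCart("cms-module"),
  "saas-cart": () => createInMemoryCart("saas-cart"),
};

function cartFromConfig(name: CartProviderName): CartProvider {
  return cartProviders[name]();
}

// Presentation-layer component: written once, unaware of which service backs it.
function cartBadge(cart: CartProvider): string {
  return `Cart (${cart.count()}) via ${cart.backend}`;
}
```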

Conclusion

The distributed CMS is already here, thanks to technologies like Gatsby and a widening array of services available for developers who simply need interchangeable single-purpose features like analytics or a cart widget. The headless or decoupled CMS is rapidly yielding to a more authentically decoupled, that is, distributed, CMS in which a more modern and modular structure wins the day. In other words, the distributed CMS is decoupled not only from its content repository but also from the very idea that a repository is a single source for data.
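
Decoupling from the idea of a single repository can be sketched as merging content from several sources into one queryable layer. Gatsby takes this approach with source plugins feeding a GraphQL data layer; the TypeScript below is not Gatsby’s API but a hypothetical, minimal version of the same idea, with made-up `ContentSource` and `UnifiedNode` types and source-qualified IDs so that two repositories can both use the ID `1` without colliding.

```typescript
// One item as reported by a single repository or service (hypothetical shape).
interface SourceItem {
  id: string;
  type: string;
  fields: Record<string, string>;
}

interface ContentSource {
  name: string;
  items(): SourceItem[];
}

// A node in the unified layer remembers where it came from.
interface UnifiedNode extends SourceItem {
  source: string;
  globalId: string;
}

// Merge every source into one store. IDs only need to be unique per source,
// so each node gets a source-qualified global ID.
function buildDataLayer(sources: ContentSource[]): Map<string, UnifiedNode> {
  const nodes = new Map<string, UnifiedNode>();
  for (const source of sources) {
    for (const item of source.items()) {
      const globalId = `${source.name}:${item.id}`;
      nodes.set(globalId, { ...item, source: source.name, globalId });
    }
  }
  return nodes;
}

// Query by type across all sources, as if they were one repository.
function nodesOfType(nodes: Map<string, UnifiedNode>, type: string): UnifiedNode[] {
  const result: UnifiedNode[] = [];
  nodes.forEach((node) => {
    if (node.type === type) result.push(node);
  });
  return result;
}
```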

For organizations that are just beginning to consider some of the implications of the decoupled CMS paradigm, fear not: decoupling your CMS, while fraught with risk in the past, will open a range of possibilities and expand flexibility in ways that your developers and your marketers will not expect. You will have the freedom to choose from more affordable services better tuned to your needs, and you will also have the resourcing leeway to hire developers working with a wider spectrum of technologies. In the distributed CMS, you are no longer inextricably tied to a single technology stack; build what you want in the technology you choose, and use a presentation layer like Gatsby to bring it all together and abstract away the varied technology stacks underneath.

Just as interchangeable parts reinvented how the global economy envisaged manufacturing, the decoupled CMS has facilitated interchangeable presentation layers by enabling new developer workflows and unlocking better maintainability from an architectural standpoint. Soon, the distributed CMS will open the door to interchangeable in-page services that will not only revolutionize how we approach the assembly of websites by content creators and marketers but will also redefine the business and vendor landscape surrounding the CMS for decades to come.

Soon, the CMS as a coherent, monolithic whole will cease to exist (in many ways, it already has), and we will need new terms and new ways to describe the content mesh and the distributed CMS architectures that will enable a channel-rich and technically robust future for content. The future of the CMS is here, and it is distributed.

Special thanks to Deane Barker, Kyle Mathews, and Sam Bhagwat for their feedback during the writing process.